Deep Morphogenesis

Fine-Tuned Diffusion Model

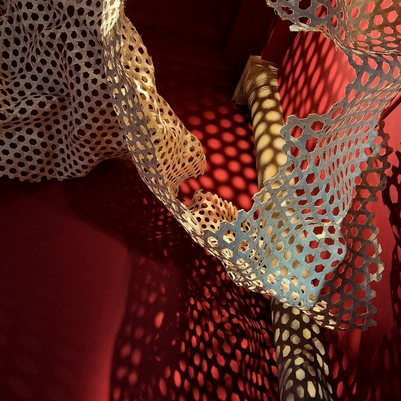

This project investigates the potential influence of generative AI on architectural practice using fine-tuned diffusion models. By enhancing conceptualization, communication, and iteration, these models offer architects new ways to visualize, share, and refine ideas. Deep Morphogenesis offers a glimpse into how emerging and future technologies might be integrated into the architectural field.

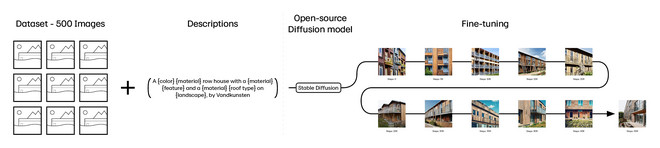

Text-to-image diffusion models (DMs) such as DALL-E 2 and Imagen have achieved state-of-the-art results in image synthesis. These models enable people without prior training in the visual arts to synthesize photorealistic, aesthetically composed images using only natural language. Stable Diffusion, an open-source model, has made it possible to fine-tune a diffusion model on a self-supplied dataset. Previous work on fine-tuning diffusion models outlines a methodology for doing so, but such fine-tuned models have yet to be evaluated for their ability to augment architectural workflows. In partnership with the architecture firm Vandkunsten, a diffusion model was fine-tuned on the firm's database of previous work.

Fine-Tuning

To ensure that the model produces high-quality outputs, a custom caption must be written for every image in the training dataset. Because this is a time-consuming endeavour, the training dataset was limited to fifty high-density, low-rise projects by Vandkunsten. A selection of five hundred images with custom captions was used to train the diffusion model. The quality of the model's outputs was evaluated by having architects from Vandkunsten take part in a blind image test.
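
As a minimal sketch of how a captioned dataset like the one described above might be organised for fine-tuning, the snippet below writes one JSON line per image, pairing a file name with its hand-written caption. It assumes the Hugging Face `datasets` "imagefolder" convention: a `metadata.jsonl` file with a `file_name` key and a caption column, here called `text`. The function name `write_caption_metadata`, the file names and the captions are hypothetical. The project's actual training pipeline is not described on this page.

```python
import json
import tempfile
from pathlib import Path


def write_caption_metadata(captions: dict[str, str], folder: Path) -> Path:
    """Write metadata.jsonl pairing each image file name with its caption.

    Assumes the Hugging Face `datasets` "imagefolder" layout: one JSON object
    per line with a `file_name` key plus a caption column (here `text`).
    """
    folder.mkdir(parents=True, exist_ok=True)
    path = folder / "metadata.jsonl"
    with path.open("w", encoding="utf-8") as f:
        for file_name, caption in sorted(captions.items()):
            caption = caption.strip()
            # Every training image needs a hand-written caption; fail loudly if one is missing.
            if not caption:
                raise ValueError(f"missing caption for {file_name}")
            f.write(json.dumps({"file_name": file_name, "text": caption}) + "\n")
    return path


if __name__ == "__main__":
    # Hypothetical file names and captions, for illustration only.
    example = {
        "project_01_view_a.jpg": "low-rise housing, brick facades, pitched roofs, shared courtyard",
        "project_01_view_b.jpg": "terraced houses along a footpath, timber cladding, overcast light",
    }
    with tempfile.TemporaryDirectory() as tmp:
        out = write_caption_metadata(example, Path(tmp))
        print(out.read_text(encoding="utf-8"))
```

Rejecting empty captions reflects the point above that every image needs its own caption. With this layout, the folder could be loaded with `datasets.load_dataset("imagefolder", data_dir=...)`.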

Interfacing

As text prompts are the primary way of interfacing with a diffusion model, a study was performed on Vandkunsten Diffusion, the fine-tuned model, to measure how strongly individual words shape the architectural imagery it produces. This was done by generating hundreds of images with a script that altered a fixed prompt by removing or exchanging a single word at a time. In this way, the influence of each individual word on the quality of the output could be measured.
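
The single-word manipulation described above can be sketched as a prompt generator. Each variant differs from a fixed base prompt by exactly one removed or exchanged word, and each variant is paired with the same set of seeds so that differences in the output can be attributed to the changed word rather than to random noise. The function names, the base prompt and the substitution list are hypothetical. The image-generation step itself is left out, and this is not the project's actual script.

```python
from typing import Iterator


def single_word_variants(
    base_prompt: str, substitutions: dict[str, list[str]]
) -> Iterator[tuple[str, str]]:
    """Yield (label, prompt) pairs that each differ from the base prompt by one word.

    Every word gets a removal variant; words listed in `substitutions` also get
    one exchange variant per alternative.
    """
    words = base_prompt.split()
    yield ("base", base_prompt)
    for i, word in enumerate(words):
        # Removal: drop the i-th word and keep the rest of the prompt unchanged.
        yield (f"remove '{word}'", " ".join(words[:i] + words[i + 1:]))
        # Exchange: replace the i-th word with each listed alternative.
        for alt in substitutions.get(word, []):
            yield (f"'{word}' -> '{alt}'", " ".join(words[:i] + [alt] + words[i + 1:]))


def generation_jobs(
    base_prompt: str, substitutions: dict[str, list[str]], seeds: list[int]
) -> list[tuple[str, str, int]]:
    """Pair every variant with every seed, so word changes are compared under identical noise."""
    return [
        (label, prompt, seed)
        for label, prompt in single_word_variants(base_prompt, substitutions)
        for seed in seeds
    ]


if __name__ == "__main__":
    # Hypothetical base prompt and substitutions, for illustration only.
    jobs = generation_jobs(
        "low-rise housing with brick facades", {"brick": ["timber", "concrete"]}, seeds=[0, 1]
    )
    for label, prompt, seed in jobs:
        print(f"{seed}\t{label:<22}\t{prompt}")
```

Keeping the seed and sampler settings constant across variants is what makes a per-word comparison meaningful. Each job would then be passed to the fine-tuned model.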

Figure: ControlNet input shapes

Input Image

The ability to supplement a prompt with an input image was also evaluated, specifically through an open-source method called ControlNet. Among other inputs, this method can use a line drawing or a depth map as a framework on which the model synthesizes an image. Because this lets the model build on architecture's own modes of production, namely drawing and modelling, a study was conducted on how effectively ControlNet can augment architectural workflows.
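
As an illustration of preparing a depth-map input from a 3D model, the sketch below converts a grid of per-pixel camera distances into 8-bit grayscale values. It assumes the convention of the MiDaS-style depth maps commonly used with ControlNet's depth model, where nearer surfaces appear brighter. The function name `depth_to_control_values` and the input format (a nested list of distances, for example sampled from a modelling viewport) are hypothetical. Writing the result to an image file is left out.

```python
import math


def depth_to_control_values(depths: list[list[float]]) -> list[list[int]]:
    """Map per-pixel camera distances to 0-255 grayscale, near = bright, far = dark.

    Non-finite entries (e.g. background pixels that hit no geometry) become 0.
    A plain linear mapping of distance is used here for clarity.
    """
    finite = [d for row in depths for d in row if math.isfinite(d)]
    if not finite:
        raise ValueError("depth grid contains no finite values")
    near, far = min(finite), max(finite)
    span = far - near
    image = []
    for row in depths:
        out_row = []
        for d in row:
            if not math.isfinite(d):
                out_row.append(0)  # background: darkest value
                continue
            # Normalize to [0, 1] and invert, so the nearest surface maps to 255.
            t = 1.0 if span == 0 else (far - d) / span
            out_row.append(round(255 * t))
        image.append(out_row)
    return image


if __name__ == "__main__":
    # Hypothetical 2x3 depth grid in metres; inf marks empty background.
    grid = [[4.0, 6.0, 8.0], [5.0, 7.0, math.inf]]
    for row in depth_to_control_values(grid):
        print(row)
```

The linear mapping is a simplification. MiDaS predicts relative inverse depth, so normalizing `1 / d` instead of `d` would follow that convention more closely.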

AI in Architecture

The adoption of fine-tuned text-to-image diffusion models in architectural practice suggests promising enhancements in several areas. These models can help architects conceptualize ideas and communicate them more clearly, especially to non-experts. While traditional architectural drawings remain essential, diffusion models offer a tool for quickly generating design variations. They could also enrich proposal materials with comprehensive representations of a project's surroundings and with distinctive visual styles.

Rhino Plugin

The Royal Danish Academy (Det Kongelige Akademi) supports the UN Sustainable Development Goals

Since 2017, the Royal Danish Academy has worked with the UN Sustainable Development Goals. This is reflected in its research, teaching, and graduation projects. This project has addressed the following UN goals: